Introduction

The study of post-medieval skeletal remains has been largely disregarded and, until the 1980s, excavations in post-medieval contexts were rarely undertaken in British archaeology (Roberts and Cox 2003, 300). Nevertheless, the skeletal remains of this period are exceptionally important for the understanding of changing diet and health in an increasingly industrialised Britain. The period is defined by a widening social divide between higher and lower status individuals, with the urbanisation of Britain leading to squalid, unhygienic conditions in an expanding urban environment (Webb et al. 2009, 170). The skeletal remains of the individuals who lived during this period have undergone varying degrees of examination and can provide some insight into how status influenced diet and health.

The health and survival of children in any population is the most demographically sensitive indicator of biocultural change (Roth 1992; Lewis 1999, 1) and can be used as an effective measure of health across past populations. Non-adults experience accelerated development of bone and dentition during early life (Scheuer and Black 2004, 149); stress indicators affecting growth and metabolism are therefore more commonly observed in young non-adult remains.

Diet, nutritional deficiency and stress

To better understand diet and nutritional deficiency in the context of socioeconomic status, metabolic disease and non-specific indicators of stress must be fully explored. Scurvy, rickets, cribra orbitalia, and dental enamel hypoplasia (DEH) have been linked with skeletal stress induced by nutritionally deficient diets in childhood (e.g. Newman and Gowland 2017; Hughes-Morey 2016), and hence they will be discussed within a post-medieval context.

Nutritional rickets

Rickets is most commonly linked with a lack of sunlight exposure, rather than a dietary deficiency. Despite this, the consumption of breastmilk in infancy, and of food sources high in vitamin D such as fatty fish and egg yolks (Holick 2006, 5) in later childhood, is vital in areas of low sunlight exposure. If insufficient amounts of vitamin D are absorbed, mineralisation of the newly formed bone matrix is impaired, and the bones subsequently become soft and prone to bending under weight-bearing in children (Ortner and Mays 1998, 52) (figure 1). The palaeopathological literature has cited nutritional deficiency as a significant factor in the skeletal manifestation of rickets in some rural post-medieval populations (Waters-Rist and Hoogland 2018; Watts and Valme 2018), but largely attributes rickets in post-medieval urban populations to air pollution (e.g. Mays et al. 2006, 362) and industrialised environments. Despite the condition’s easy diagnosis in the skeleton, it acts as a poor indicator for status and diet and is often termed a classless disease, affecting both low and high-status individuals variably (Cox 1996, 23).

Scurvy

Scurvy occurs due to a deficiency in vitamin C (ascorbic acid) in the body and is considered a metabolic disease of the bone, affecting remodelling patterns (Ortner 2003, 383; Brickley et al. 2020, 41). Vitamin C is required in the body to maintain the formation of type I collagen, the structural protein used to synthesise connective tissue and a large constituent of bone (Sandhu et al. 2012, 153), and can only be obtained through dietary sources (Armelagos et al. 2014, 10). The London Bills of Mortality indicated an increase in the disease during the 17th century, and a high prevalence of the disease is often observed in sailor (Sinnot 2015) and famine (Geber 2012) populations.

Cribra orbitalia

Osteologically, cribra orbitalia is characterised by the pitting of the orbital roofs and the frontal bone of the skull (White and Folkens 2005, 320), formed as a result of hyperplasia, or thinning of the outer cortex (Gowland and Redfern 2010, 22). Walker et al. (2009, 112) have proposed megaloblastic anaemia or the deficiencies of vitamin B9 and B12 as the likely cause of cribra orbitalia in most archaeological populations, and this is now widely accepted amongst the osteoarchaeological community.

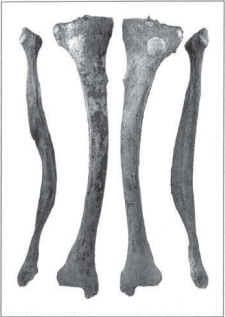

DEH

Dental enamel hypoplasia (DEH) is a condition characterised by fluctuating growth in the dentition. The pathology can be identified macroscopically by lines, pits, and grooves in the dental enamel (figure 2), reflecting reduced mineralisation during childhood (Hillson 2005, 168). DEH does not have a specific aetiology and is considered a non-specific indicator of early childhood stress.

Diet in post-medieval Britain

There was a significant disparity between the post-medieval childhood and adult diet of the upper- and lower-class members of society. The urban diets of the lower classes were often rich in carbohydrates and fats, but low in protein and deficient in vitamins, lacking in both variety and quality of food (Wohl 1983, 13; Clayton and Rowbotham 2009, 1238). Bread was a staple part of the diet (Fischler 1987, 10) and was becoming increasingly cariogenic with the introduction of new milling techniques (Mant and Roberts 2015, 19). In contrast, the middle and upper classes had greater access to protein-rich sources of food, in addition to a greater variety of vegetables and fruits (Dhaliwal et al. 2019, 586). However, diet and nutritional deficiency during the post-medieval period are difficult to ascertain from osteological evidence alone. Mant and Roberts (2015) established no statistical difference between the crude prevalence rates of caries in lower (St. Bride's Church) and higher status individuals (Chelsea Old Church), with a generally high prevalence of dental cavities being observed across populations, regardless of status.

Prior to 1764, refined sugar and white milled bread were almost exclusively consumed by high-status populations (Thirsk 2007), and the lower classes could not afford to buy such products. However, the Sugar Act (Renshaw and Powers 2016, 164), and later removal of import duties on sugar in 1845, in addition to the repeal of the Corn Laws in 1846 (Corbett and Moore 1976, 401), led to a significant reduction in sugar costs. This enabled increased access by lower-class individuals. Despite this, it is evident that sugar was consumed in different contexts depending on the socioeconomic status of an individual, and although similar caries rates are observed between low and high-status populations, this is likely due to varying patterns of dietary consumption. A high proportion of the calorific intake of the urban lower classes consisted of recycled tea with sugar (Henderson et al. 2013, 253), whilst high-status individuals consumed tea for leisure (Drummond and Wilbraham 1957), rather than as a dietary necessity. Additionally, the upper classes had access to a wider variety of refined sugar products, including jam, treacle and chocolate (Corbett and Moore 1976), that were becoming increasingly popular in nineteenth-century Britain.

Post-medieval intrauterine stress

The maternal diet of poorer populations in the urban environments of London, Manchester, and Leeds was likely deficient in vitamins C, D and B12, and in iron, due to limited access to fresh meat and vegetables compared to higher status individuals (Mant and Roberts 2015, 5). Furthermore, it was customary for men to receive larger portions of food than women, and in low-status families this could result in women not receiving any meat, subsisting only on bread, weak tea, and scraps (Wohl 1983, 12). This would have a detrimental effect on maternal and foetal health if a poor diet was sustained throughout pregnancy, causing intrauterine stress. Hodson and Gowland (2020, 39) have highlighted the dire consequences of poor maternal diet for the health of foetuses, perinates and infants in low-status post-medieval London populations, which is further reflected in the wider palaeopathological literature (Lewis and Gowland 2007; Lewis 2013, 3; Newman et al. 2019). Poor maternal and infant health can be prominently observed in the post-medieval, low-status London population of Cross Bones, where a high prevalence of DEH on the deciduous dentition of non-adults has been determined (Newman and Gowland 2017, 226). Furthermore, the high mortality rate of perinates also likely reflects poor maternal health (ibid, 224). Skeletal stress and metabolic disease in infancy would have been further exacerbated by pollution and other environmental factors (Henderson 2013, 267; Lewis 2003, 6). Ultimately, this could weaken the immune system of an infant and expose them to infection, leading to an increased risk of child mortality, or of early adult mortality in the case of survival into adulthood.

The maternal diets of some high and middle-status individuals were also likely deficient in vitamins. Notably, Lewis (2002) has identified DEH on the deciduous dentition of non-adults before the age of 6 months in the industrial middle-status London cemetery population of Spitalfields, which could be associated with poor maternal diet. This perhaps reflects a social choice to consume large quantities of meat (Fox 2013, 174), a sign of higher social status, whilst consuming few vegetables. However, it should be acknowledged that intrauterine stress could also indicate exposure to pathogens in utero due to maternal infection, rather than a dietary deficiency. Some women, regardless of status, were anaemic during pregnancy, as the use of iron supplements was not yet practised (Molleson and Cox 1993, 43). Furthermore, several pregnancies in quick succession would likely deplete maternal iron stores, with detrimental effects on maternal and infant health.

Cultural feeding practices

By the nineteenth century, breastfeeding had declined, with bottle feeding becoming more popular within lower status populations (Henderson et al. 2013, 257). This likely reflects the increased employment of women in industrial manufacturing. However, the poor diet, nutrition and living conditions of low-status mothers could have also led to an inability to produce sufficient nourishment for their children (Drummond and Wilbraham 1991, 373). The use of cow’s milk to feed infants would have had a significant detrimental effect on health. This is reflected in the rural post-medieval Dutch population of Beemster (Waters-Rist and Hoogland 2018), where a high prevalence of rickets was observed despite widespread access to sunlight. It is therefore apparent that in this population a nutritionally deficient diet was primarily responsible for skeletal rickets. According to the clinical literature (Willets et al. 1999), the practice of feeding cow’s milk to infants can cause gastrointestinal infection. This can subsequently lead to rectal bleeding and a loss of iron stores in the body, contributing to iron deficiency anaemia (Ziegler 2011). In contrast, the consumption of breastmilk, as opposed to cow’s milk, would have provided significant protection against parasitic infection and diarrhoea (Abdel-Hafeez 2013), which was a prominent cause of mortality in infants during the industrial era (Davenport 2019, 197). This is particularly relevant when discussing low-status urban populations, who resided in unsanitary and cramped living conditions (Luckin and Mooney 1997, 46) and were predisposed to the spread of parasitic infection. The exclusive use of cow’s milk to feed infants would, therefore, have been detrimental to health, with poor nutrition in early life increasing the risk of metabolic disease, stress, and early mortality.

Cultural feeding practices amongst the upper echelons of society could have also led to nutritionally deficient diets amongst infants and would account for skeletal stress observed in higher status populations. In eighteenth-century Britain, it was popular to feed infants pap (figure 3), which consisted of flour or breadcrumbs mixed with milk or water, and such a diet would have been seriously lacking in minerals such as iron and calcium (Cox 1996, 22). It is therefore reasonable to assume that such practices could influence the manifestation of nutritional rickets, cribra orbitalia and scurvy, as observed in the high-status London population of Chelsea Old Church (Newman and Gowland 2017). Furthermore, up until the late eighteenth century the wealthy often employed wet nurses (Fildes 1986, 121), so new-born babies would have been deprived of maternal colostrum, the first breastmilk produced by a new mother. Colostrum is responsible for destroying infective organisms in the infant’s gut, thereby supporting a strong immune system, whilst also providing concentrated amounts of nutrients, including B12 and calcium (Uruakpa 2002, 756). These practices could have a detrimental effect on an infant’s health, facilitating skeletal stress and metabolic conditions, in addition to increasing susceptibility to infectious disease.

Middle-status individuals evidently practised some of the fashionable feeding practices of the time, with both the St. Benet Sherehog and Bow Baptist middle-status London populations displaying high prevalence rates for scurvy, rickets and DEH (Newman and Gowland 2017, 224). Additionally, in the St. Benet Sherehog population metabolic disease was most prevalent between ages 1 and 5, suggesting a nutritionally deficient diet and the onset of stress during weaning (ibid, 224). Despite this, more middle-status and high-status women would have breastfed children, having more available time and sufficient nutrition compared to low-status women (Hughes-Morey 2016, 131). Regardless of social status, poor infant health, as reflected through skeletal stress, was common amongst post-medieval non-adults. However, the evidence suggests the manifestation of skeletal stress and metabolic disease was the result of different feeding practices and diets that were strongly linked to socioeconomic status.

Isotopes and early diet

Nutritional deficiency caused by cultural feeding practices and poverty can be observed through isotopic sequential analysis of the dentition in post-medieval populations. Henderson et al. (2014) used nitrogen-15 and carbon-13 ratios from the dentine of post-medieval non-adult individuals from the low-status population of Southwark, London, revealing a weaning age of around 6 months. From the isotopic data at least, it appears that the eighteenth-century fashion of feeding dry food to infants from birth (Fildes 1986, 121) was not widely practised in this population. In contrast, an isotopic study conducted by Nitsch et al. (2011) determined a variety of feeding practices in the middle-status London Spitalfields population, with some individuals receiving no breastmilk, whilst others were breastfed for up to 1.5 years of age. This reflects the broad range of cultural feeding practices that were undertaken by different socioeconomic groups. It should be acknowledged that currently few isotopic studies exist outside of the London geographic area, and it is not apparent whether infant feeding practices were the same across Britain.

Tuberculosis, diet and health

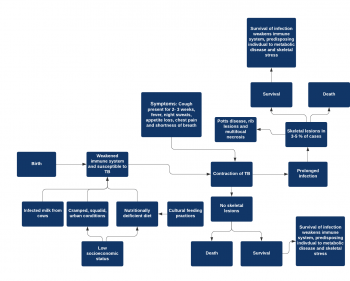

Tuberculosis (TB) is a chronic infection caused by tubercle bacilli, with initial infection (usually of the lungs) leading to pulmonary lesions (Russel 2011, 252). The severity and duration of tuberculosis are linked with nutrition and diet, which are fundamental in strengthening the immune system (Scrimshaw et al. 1959, 367; Katona and Katona-Apte 2008, 1582) against infectious diseases (figure 4). It can be assumed that lower status individuals, especially adults, had an overall poorer diet (Mant and Roberts 2015, 4) than their middle and high-status equivalents. The overcrowding in urban areas such as London, Manchester, and Leeds would have increased the risk of droplet infection (Müller et al. 2014, 4) compared to rural areas, and it can be assumed that low-status urban dwellers were most susceptible to infection. This theory is partially supported by the limited osteoarchaeological evidence, with London urban populations generally displaying skeletal evidence for the pathology, whilst rural populations do not (Roberts and Cox 2003, 339).

Limitations of the evidence and possible future research

It is worth emphasising that the skeletal remains present a somewhat fragmentary source of evidence. The period saw the establishment of the middle classes, made up of previously working-class citizens. Therefore, skeletal stress and metabolic disease manifested in middle-status populations could have been influenced by poor nutrition and living conditions in infancy if an individual was born into a working-class family (Lewis 2002, 217).

The relationship between diet and socioeconomic status is evidently difficult to determine in post-medieval contexts, with multiple factors influencing the manifestation of skeletal stress and metabolic disease. Interpretation of this osteological data is made more difficult by the osteological paradox: individuals with no skeletal evidence of pathology may represent the “unhealthiest”, who died before skeletal manifestations could occur (Wood 1992; Geber 2015, 16). Furthermore, the strength of the immune system is strongly linked with nutritional intake, and therefore low-status individuals who were malnourished in utero and infancy would be less likely to develop chronic skeletal lesions, succumbing instead to acute disease (Lewis 2003, 3; Ortner 2003, 115; Newman and Gowland 2019, 117). A high prevalence of pathologies within a population may therefore reflect healthier individuals, making the palaeopathological record difficult to interpret. Despite this, young age at death is still a good indicator of health within a population (Roberts and Cox 2003, 303), and although it cannot directly be linked with diet without the presence of skeletal lesions, it can be assumed that nutritional deficiency often played a role in mortality (Hodson and Gowland 2020, 40), particularly in non-adult individuals. This theory is supported by the increased non-adult mortality rate cited within most low-status post-medieval populations (Roberts and Cox 2003, 303), who were often nutritionally deficient.

Emerging clinical evidence (Barker 2012, 187) suggests that intergenerational health significantly affects the manifestation of skeletal stress and metabolic disease, and in future research this should be applied to palaeopathological contexts (Gowland 2015, 531). Future work on post-medieval populations would benefit from isotopic analysis of northern skeletal remains, which would help to determine dietary practices in northern England, for which little documentary evidence exists. If non-adult dentition were utilised, the infant feeding practices of northern mothers could be determined and compared to the London data available.

Concluding thoughts

It is evident that socioeconomic status had a significant influence over diet and health in post-medieval Britain, with maternal diet influencing the health of an individual even before birth. However, the impact of cultural practices and environmental conditions in urbanised areas resulted in skeletal stress being prevalent in non-adult individuals regardless of socioeconomic status. It should be noted, however, that low-status populations would have received poor nutrition throughout life and, due to their cramped, squalid living conditions, were more susceptible to infectious diseases such as tuberculosis. This is evident from the high mortality rate of low-status non-adults, which suggests many infants died of acute diseases without developing skeletal lesions indicative of chronic infection. Therefore, the independent impact of status on diet and health cannot be determined from the osteological evidence alone, and future research must also utilise documentary and isotopic evidence to create a more complete palaeopathological picture.